spikingjelly.activation_based.layer package

Module contents

- class spikingjelly.activation_based.layer.StepModeContainer(stateful: bool, *args)[源代码]

基类:

Sequential,StepModule

- class spikingjelly.activation_based.layer.Conv1d(in_channels: int, out_channels: int, kernel_size: Union[int, Tuple[int]], stride: Union[int, Tuple[int]] = 1, padding: Union[str, int, Tuple[int]] = 0, dilation: Union[int, Tuple[int]] = 1, groups: int = 1, bias: bool = True, padding_mode: str = 'zeros', step_mode: str = 's')[源代码]

基类:

Conv1d,StepModule- 参数:

step_mode (str) – 步进模式,可以为 ‘s’ (单步) 或 ‘m’ (多步)

其他的参数API参见

torch.nn.Conv1d- 参数:

step_mode (str) – the step mode, which can be s (single-step) or m (multi-step)

Refer to

torch.nn.Conv1dfor other parameters’ API

- class spikingjelly.activation_based.layer.Conv2d(in_channels: int, out_channels: int, kernel_size: Union[int, Tuple[int, int]], stride: Union[int, Tuple[int, int]] = 1, padding: Union[str, int, Tuple[int, int]] = 0, dilation: Union[int, Tuple[int, int]] = 1, groups: int = 1, bias: bool = True, padding_mode: str = 'zeros', step_mode: str = 's')[源代码]

基类:

Conv2d,StepModule- 参数:

step_mode (str) – 步进模式,可以为 ‘s’ (单步) 或 ‘m’ (多步)

其他的参数API参见

torch.nn.Conv2d- 参数:

step_mode (str) – the step mode, which can be s (single-step) or m (multi-step)

Refer to

torch.nn.Conv2dfor other parameters’ API

- class spikingjelly.activation_based.layer.Conv3d(in_channels: int, out_channels: int, kernel_size: Union[int, Tuple[int, int, int]], stride: Union[int, Tuple[int, int, int]] = 1, padding: Union[str, int, Tuple[int, int, int]] = 0, dilation: Union[int, Tuple[int, int, int]] = 1, groups: int = 1, bias: bool = True, padding_mode: str = 'zeros', step_mode: str = 's')[源代码]

基类:

Conv3d,StepModule- 参数:

step_mode (str) – 步进模式,可以为 ‘s’ (单步) 或 ‘m’ (多步)

其他的参数API参见

torch.nn.Conv3d- 参数:

step_mode (str) – the step mode, which can be s (single-step) or m (multi-step)

Refer to

torch.nn.Conv3dfor other parameters’ API

- class spikingjelly.activation_based.layer.Upsample(size: Optional[Union[int, Tuple[int, ...]]] = None, scale_factor: Optional[Union[float, Tuple[float, ...]]] = None, mode: str = 'nearest', align_corners: Optional[bool] = None, recompute_scale_factor: Optional[bool] = None, step_mode: str = 's')[源代码]

基类:

Upsample,StepModule- 参数:

step_mode (str) – 步进模式,可以为 ‘s’ (单步) 或 ‘m’ (多步)

其他的参数API参见

torch.nn.Upsample- 参数:

step_mode (str) – the step mode, which can be s (single-step) or m (multi-step)

Refer to

torch.nn.Upsamplefor other parameters’ API

- class spikingjelly.activation_based.layer.ConvTranspose1d(in_channels: int, out_channels: int, kernel_size: Union[int, Tuple[int]], stride: Union[int, Tuple[int]] = 1, padding: Union[int, Tuple[int]] = 0, output_padding: Union[int, Tuple[int]] = 0, groups: int = 1, bias: bool = True, dilation: Union[int, Tuple[int]] = 1, padding_mode: str = 'zeros', step_mode: str = 's')[源代码]

基类:

ConvTranspose1d,StepModule- 参数:

step_mode (str) – 步进模式,可以为 ‘s’ (单步) 或 ‘m’ (多步)

其他的参数API参见

torch.nn.ConvTranspose1d- 参数:

step_mode (str) – the step mode, which can be s (single-step) or m (multi-step)

Refer to

torch.nn.ConvTranspose1dfor other parameters’ API

- class spikingjelly.activation_based.layer.ConvTranspose2d(in_channels: int, out_channels: int, kernel_size: Union[int, Tuple[int, int]], stride: Union[int, Tuple[int, int]] = 1, padding: Union[int, Tuple[int, int]] = 0, output_padding: Union[int, Tuple[int, int]] = 0, groups: int = 1, bias: bool = True, dilation: int = 1, padding_mode: str = 'zeros', step_mode: str = 's')[源代码]

基类:

ConvTranspose2d,StepModule- 参数:

step_mode (str) – 步进模式,可以为 ‘s’ (单步) 或 ‘m’ (多步)

其他的参数API参见

torch.nn.ConvTranspose2d- 参数:

step_mode (str) – the step mode, which can be s (single-step) or m (multi-step)

Refer to

torch.nn.ConvTranspose2dfor other parameters’ API

- class spikingjelly.activation_based.layer.ConvTranspose3d(in_channels: int, out_channels: int, kernel_size: Union[int, Tuple[int, int, int]], stride: Union[int, Tuple[int, int, int]] = 1, padding: Union[int, Tuple[int, int, int]] = 0, output_padding: Union[int, Tuple[int, int, int]] = 0, groups: int = 1, bias: bool = True, dilation: Union[int, Tuple[int, int, int]] = 1, padding_mode: str = 'zeros', step_mode: str = 's')[源代码]

基类:

ConvTranspose3d,StepModule- 参数:

step_mode (str) – 步进模式,可以为 ‘s’ (单步) 或 ‘m’ (多步)

其他的参数API参见

torch.nn.ConvTranspose3d- 参数:

step_mode (str) – the step mode, which can be s (single-step) or m (multi-step)

Refer to

torch.nn.ConvTranspose3dfor other parameters’ API

- class spikingjelly.activation_based.layer.BatchNorm1d(num_features, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True, step_mode='s')[源代码]

-

- 参数:

step_mode (str) – 步进模式,可以为 ‘s’ (单步) 或 ‘m’ (多步)

其他的参数API参见

torch.nn.BatchNorm1d- 参数:

step_mode (str) – the step mode, which can be s (single-step) or m (multi-step)

Refer to

torch.nn.BatchNorm1dfor other parameters’ API

- class spikingjelly.activation_based.layer.BatchNorm2d(num_features, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True, step_mode='s')[源代码]

-

- 参数:

step_mode (str) – 步进模式,可以为 ‘s’ (单步) 或 ‘m’ (多步)

其他的参数API参见

torch.nn.BatchNorm2d- 参数:

step_mode (str) – the step mode, which can be s (single-step) or m (multi-step)

Refer to

torch.nn.BatchNorm2dfor other parameters’ API

- class spikingjelly.activation_based.layer.BatchNorm3d(num_features, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True, step_mode='s')[源代码]

-

- 参数:

step_mode (str) – 步进模式,可以为 ‘s’ (单步) 或 ‘m’ (多步)

其他的参数API参见

torch.nn.BatchNorm3d- 参数:

step_mode (str) – the step mode, which can be s (single-step) or m (multi-step)

Refer to

torch.nn.BatchNorm3dfor other parameters’ API

- class spikingjelly.activation_based.layer.GroupNorm(num_groups: int, num_channels: int, eps: float = 1e-05, affine: bool = True, step_mode='s')[源代码]

基类:

GroupNorm,StepModule- 参数:

step_mode (str) – 步进模式,可以为 ‘s’ (单步) 或 ‘m’ (多步)

其他的参数API参见

torch.nn.GroupNorm- 参数:

step_mode (str) – the step mode, which can be s (single-step) or m (multi-step)

Refer to

torch.nn.GroupNormfor other parameters’ API

- class spikingjelly.activation_based.layer.MaxPool1d(kernel_size: Union[int, Tuple[int]], stride: Optional[Union[int, Tuple[int]]] = None, padding: Union[int, Tuple[int]] = 0, dilation: Union[int, Tuple[int]] = 1, return_indices: bool = False, ceil_mode: bool = False, step_mode='s')[源代码]

基类:

MaxPool1d,StepModule- 参数:

step_mode (str) – 步进模式,可以为 ‘s’ (单步) 或 ‘m’ (多步)

其他的参数API参见

torch.nn.MaxPool1d- 参数:

step_mode (str) – the step mode, which can be s (single-step) or m (multi-step)

Refer to

torch.nn.MaxPool1dfor other parameters’ API

- class spikingjelly.activation_based.layer.MaxPool2d(kernel_size: Union[int, Tuple[int, int]], stride: Optional[Union[int, Tuple[int, int]]] = None, padding: Union[int, Tuple[int, int]] = 0, dilation: Union[int, Tuple[int, int]] = 1, return_indices: bool = False, ceil_mode: bool = False, step_mode='s')[源代码]

基类:

MaxPool2d,StepModule- 参数:

step_mode (str) – 步进模式,可以为 ‘s’ (单步) 或 ‘m’ (多步)

其他的参数API参见

torch.nn.MaxPool2d- 参数:

step_mode (str) – the step mode, which can be s (single-step) or m (multi-step)

Refer to

torch.nn.MaxPool2dfor other parameters’ API

- class spikingjelly.activation_based.layer.MaxPool3d(kernel_size: Union[int, Tuple[int, int, int]], stride: Optional[Union[int, Tuple[int, int, int]]] = None, padding: Union[int, Tuple[int, int, int]] = 0, dilation: Union[int, Tuple[int, int, int]] = 1, return_indices: bool = False, ceil_mode: bool = False, step_mode='s')[源代码]

基类:

MaxPool3d,StepModule- 参数:

step_mode (str) – 步进模式,可以为 ‘s’ (单步) 或 ‘m’ (多步)

其他的参数API参见

torch.nn.MaxPool3d- 参数:

step_mode (str) – the step mode, which can be s (single-step) or m (multi-step)

Refer to

torch.nn.MaxPool3dfor other parameters’ API

- class spikingjelly.activation_based.layer.AvgPool1d(kernel_size: Union[int, Tuple[int]], stride: Optional[Union[int, Tuple[int]]] = None, padding: Union[int, Tuple[int]] = 0, ceil_mode: bool = False, count_include_pad: bool = True, step_mode='s')[源代码]

基类:

AvgPool1d,StepModule- 参数:

step_mode (str) – 步进模式,可以为 ‘s’ (单步) 或 ‘m’ (多步)

其他的参数API参见

torch.nn.AvgPool1d- 参数:

step_mode (str) – the step mode, which can be s (single-step) or m (multi-step)

Refer to

torch.nn.AvgPool1dfor other parameters’ API

- class spikingjelly.activation_based.layer.AvgPool2d(kernel_size: Union[int, Tuple[int, int]], stride: Optional[Union[int, Tuple[int, int]]] = None, padding: Union[int, Tuple[int, int]] = 0, ceil_mode: bool = False, count_include_pad: bool = True, divisor_override: Optional[int] = None, step_mode='s')[源代码]

基类:

AvgPool2d,StepModule- 参数:

step_mode (str) – 步进模式,可以为 ‘s’ (单步) 或 ‘m’ (多步)

其他的参数API参见

torch.nn.AvgPool2d- 参数:

step_mode (str) – the step mode, which can be s (single-step) or m (multi-step)

Refer to

torch.nn.AvgPool2dfor other parameters’ API

- class spikingjelly.activation_based.layer.AvgPool3d(kernel_size: Union[int, Tuple[int, int, int]], stride: Optional[Union[int, Tuple[int, int, int]]] = None, padding: Union[int, Tuple[int, int, int]] = 0, ceil_mode: bool = False, count_include_pad: bool = True, divisor_override: Optional[int] = None, step_mode='s')[源代码]

基类:

AvgPool3d,StepModule- 参数:

step_mode (str) – 步进模式,可以为 ‘s’ (单步) 或 ‘m’ (多步)

其他的参数API参见

torch.nn.AvgPool3d- 参数:

step_mode (str) – the step mode, which can be s (single-step) or m (multi-step)

Refer to

torch.nn.AvgPool3dfor other parameters’ API

- class spikingjelly.activation_based.layer.AdaptiveAvgPool1d(output_size, step_mode='s')[源代码]

基类:

AdaptiveAvgPool1d,StepModule- 参数:

step_mode (str) – 步进模式,可以为 ‘s’ (单步) 或 ‘m’ (多步)

其他的参数API参见

torch.nn.AdaptiveAvgPool1d- 参数:

step_mode (str) – the step mode, which can be s (single-step) or m (multi-step)

Refer to

torch.nn.AdaptiveAvgPool1dfor other parameters’ API

- class spikingjelly.activation_based.layer.AdaptiveAvgPool2d(output_size, step_mode='s')[源代码]

基类:

AdaptiveAvgPool2d,StepModule- 参数:

step_mode (str) – 步进模式,可以为 ‘s’ (单步) 或 ‘m’ (多步)

其他的参数API参见

torch.nn.AdaptiveAvgPool2d- 参数:

step_mode (str) – the step mode, which can be s (single-step) or m (multi-step)

Refer to

torch.nn.AdaptiveAvgPool2dfor other parameters’ API

- class spikingjelly.activation_based.layer.AdaptiveAvgPool3d(output_size, step_mode='s')[源代码]

基类:

AdaptiveAvgPool3d,StepModule- 参数:

step_mode (str) – 步进模式,可以为 ‘s’ (单步) 或 ‘m’ (多步)

其他的参数API参见

torch.nn.AdaptiveAvgPool3d- 参数:

step_mode (str) – the step mode, which can be s (single-step) or m (multi-step)

Refer to

torch.nn.AdaptiveAvgPool3dfor other parameters’ API

- class spikingjelly.activation_based.layer.Linear(in_features: int, out_features: int, bias: bool = True, step_mode='s')[源代码]

基类:

Linear,StepModule- 参数:

step_mode (str) – 步进模式,可以为 ‘s’ (单步) 或 ‘m’ (多步)

其他的参数API参见

torch.nn.Linear- 参数:

step_mode (str) – the step mode, which can be s (single-step) or m (multi-step)

Refer to

torch.nn.Linearfor other parameters’ API

- class spikingjelly.activation_based.layer.Flatten(start_dim: int = 1, end_dim: int = -1, step_mode='s')[源代码]

基类:

Flatten,StepModule- 参数:

step_mode (str) – 步进模式,可以为 ‘s’ (单步) 或 ‘m’ (多步)

其他的参数API参见

torch.nn.Flatten- 参数:

step_mode (str) – the step mode, which can be s (single-step) or m (multi-step)

Refer to

torch.nn.Flattenfor other parameters’ API

- class spikingjelly.activation_based.layer.NeuNorm(in_channels, height, width, k=0.9, shared_across_channels=False, step_mode='s')[源代码]

基类:

MemoryModule- 参数:

in_channels – 输入数据的通道数

height – 输入数据的宽

width – 输入数据的高

k – 动量项系数

shared_across_channels – 可学习的权重

w是否在通道这一维度上共享。设置为True可以大幅度节省内存step_mode (str) – 步进模式,可以为 ‘s’ (单步) 或 ‘m’ (多步)

Direct Training for Spiking Neural Networks: Faster, Larger, Better 中提出的NeuNorm层。NeuNorm层必须放在二维卷积层后的脉冲神经元后,例如:

Conv2d -> LIF -> NeuNorm要求输入的尺寸是

[batch_size, in_channels, height, width]。in_channels是输入到NeuNorm层的通道数,也就是论文中的 \(F\)。k是动量项系数,相当于论文中的 \(k_{\tau 2}\)。论文中的 \(\frac{v}{F}\) 会根据 \(k_{\tau 2} + vF = 1\) 自动算出。

- 参数:

in_channels – channels of input

height – height of input

width – height of width

k – momentum factor

shared_across_channels – whether the learnable parameter

wis shared over channel dim. If setTrue, the consumption of memory can decrease largelystep_mode (str) – the step mode, which can be s (single-step) or m (multi-step)

The NeuNorm layer is proposed in Direct Training for Spiking Neural Networks: Faster, Larger, Better.

It should be placed after spiking neurons behind convolution layer, e.g.,

Conv2d -> LIF -> NeuNormThe input should be a 4-D tensor with

shape = [batch_size, in_channels, height, width].in_channelsis the channels of input,which is \(F\) in the paper.kis the momentum factor,which is \(k_{\tau 2}\) in the paper.\(\frac{v}{F}\) will be calculated by \(k_{\tau 2} + vF = 1\) autonomously.

- class spikingjelly.activation_based.layer.Dropout(p=0.5, step_mode='s')[源代码]

基类:

MemoryModule与

torch.nn.Dropout的几乎相同。区别在于,在每一轮的仿真中,被设置成0的位置不会发生改变;直到下一轮运行,即网络调用reset()函数后,才会按照概率去重新决定,哪些位置被置0。小技巧

这种Dropout最早由 Enabling Spike-based Backpropagation for Training Deep Neural Network Architectures 一文进行详细论述:

There is a subtle difference in the way dropout is applied in SNNs compared to ANNs. In ANNs, each epoch of training has several iterations of mini-batches. In each iteration, randomly selected units (with dropout ratio of \(p\)) are disconnected from the network while weighting by its posterior probability (\(1-p\)). However, in SNNs, each iteration has more than one forward propagation depending on the time length of the spike train. We back-propagate the output error and modify the network parameters only at the last time step. For dropout to be effective in our training method, it has to be ensured that the set of connected units within an iteration of mini-batch data is not changed, such that the neural network is constituted by the same random subset of units during each forward propagation within a single iteration. On the other hand, if the units are randomly connected at each time-step, the effect of dropout will be averaged out over the entire forward propagation time within an iteration. Then, the dropout effect would fade-out once the output error is propagated backward and the parameters are updated at the last time step. Therefore, we need to keep the set of randomly connected units for the entire time window within an iteration.

- 参数:

This layer is almost same with

torch.nn.Dropout. The difference is that elements have been zeroed at first step during a simulation will always be zero. The indexes of zeroed elements will be update only afterreset()has been called and a new simulation is started.Tip

This kind of Dropout is firstly described in Enabling Spike-based Backpropagation for Training Deep Neural Network Architectures:

There is a subtle difference in the way dropout is applied in SNNs compared to ANNs. In ANNs, each epoch of training has several iterations of mini-batches. In each iteration, randomly selected units (with dropout ratio of \(p\)) are disconnected from the network while weighting by its posterior probability (\(1-p\)). However, in SNNs, each iteration has more than one forward propagation depending on the time length of the spike train. We back-propagate the output error and modify the network parameters only at the last time step. For dropout to be effective in our training method, it has to be ensured that the set of connected units within an iteration of mini-batch data is not changed, such that the neural network is constituted by the same random subset of units during each forward propagation within a single iteration. On the other hand, if the units are randomly connected at each time-step, the effect of dropout will be averaged out over the entire forward propagation time within an iteration. Then, the dropout effect would fade-out once the output error is propagated backward and the parameters are updated at the last time step. Therefore, we need to keep the set of randomly connected units for the entire time window within an iteration.

- class spikingjelly.activation_based.layer.Dropout2d(p=0.2, step_mode='s')[源代码]

基类:

Dropout与

torch.nn.Dropout2d的几乎相同。区别在于,在每一轮的仿真中,被设置成0的位置不会发生改变;直到下一轮运行,即网络调用reset()函数后,才会按照概率去重新决定,哪些位置被置0。关于SNN中Dropout的更多信息,参见 layer.Dropout。

- 参数:

This layer is almost same with

torch.nn.Dropout2d. The difference is that elements have been zeroed at first step during a simulation will always be zero. The indexes of zeroed elements will be update only afterreset()has been called and a new simulation is started.For more information about Dropout in SNN, refer to layer.Dropout.

- class spikingjelly.activation_based.layer.SynapseFilter(tau=100.0, learnable=False, step_mode='s')[源代码]

基类:

MemoryModule- 参数:

tau – time 突触上电流衰减的时间常数

learnable – 时间常数在训练过程中是否是可学习的。若为

True,则tau会被设定成时间常数的初始值step_mode (str) – 步进模式,可以为 ‘s’ (单步) 或 ‘m’ (多步)

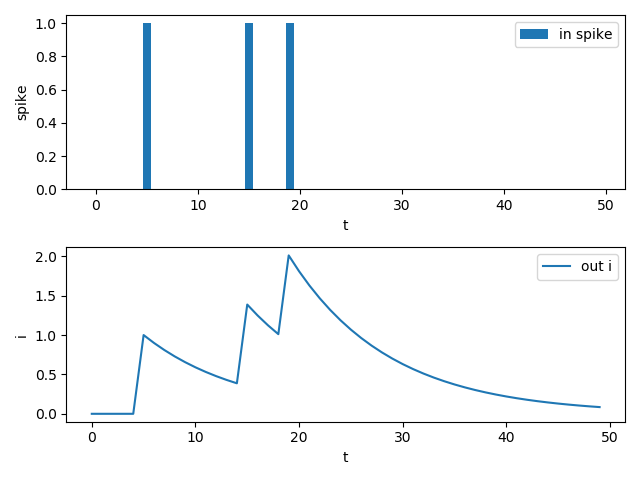

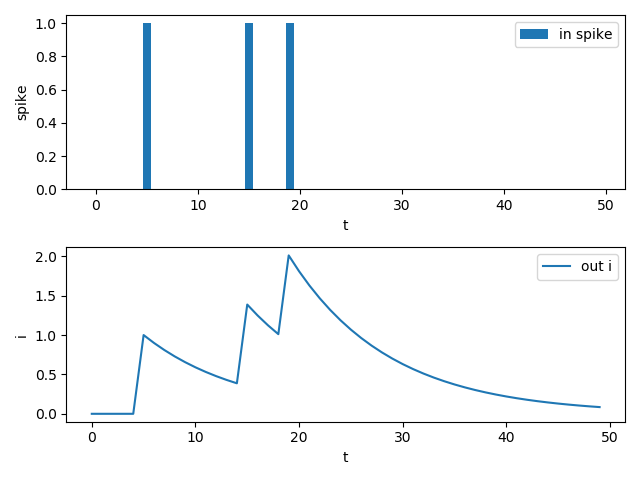

具有滤波性质的突触。突触的输出电流满足,当没有脉冲输入时,输出电流指数衰减:

\[\tau \frac{\mathrm{d} I(t)}{\mathrm{d} t} = - I(t)\]当有新脉冲输入时,输出电流自增1:

\[I(t) = I(t) + 1\]记输入脉冲为 \(S(t)\),则离散化后,统一的电流更新方程为:

\[I(t) = I(t-1) - (1 - S(t)) \frac{1}{\tau} I(t-1) + S(t)\]这种突触能将输入脉冲进行平滑,简单的示例代码和输出结果:

T = 50 in_spikes = (torch.rand(size=[T]) >= 0.95).float() lp_syn = LowPassSynapse(tau=10.0) pyplot.subplot(2, 1, 1) pyplot.bar(torch.arange(0, T).tolist(), in_spikes, label='in spike') pyplot.xlabel('t') pyplot.ylabel('spike') pyplot.legend() out_i = [] for i in range(T): out_i.append(lp_syn(in_spikes[i])) pyplot.subplot(2, 1, 2) pyplot.plot(out_i, label='out i') pyplot.xlabel('t') pyplot.ylabel('i') pyplot.legend() pyplot.show()

输出电流不仅取决于当前时刻的输入,还取决于之前的输入,使得该突触具有了一定的记忆能力。

这种突触偶有使用,例如:

Unsupervised learning of digit recognition using spike-timing-dependent plasticity

另一种视角是将其视为一种输入为脉冲,并输出其电压的LIF神经元。并且该神经元的发放阈值为 \(+\infty\) 。

神经元最后累计的电压值一定程度上反映了该神经元在整个仿真过程中接收脉冲的数量,从而替代了传统的直接对输出脉冲计数(即发放频率)来表示神经元活跃程度的方法。因此通常用于最后一层,在以下文章中使用:

Enabling spike-based backpropagation for training deep neural network architectures

- 参数:

tau – time constant that determines the decay rate of current in the synapse

learnable – whether time constant is learnable during training. If

True, thentauwill be the initial value of time constantstep_mode (str) – the step mode, which can be s (single-step) or m (multi-step)

The synapse filter that can filter input current. The output current will decay when there is no input spike:

\[\tau \frac{\mathrm{d} I(t)}{\mathrm{d} t} = - I(t)\]The output current will increase 1 when there is a new input spike:

\[I(t) = I(t) + 1\]Denote the input spike as \(S(t)\), then the discrete current update equation is as followed:

\[I(t) = I(t-1) - (1 - S(t)) \frac{1}{\tau} I(t-1) + S(t)\]This synapse can smooth input. Here is the example and output:

T = 50 in_spikes = (torch.rand(size=[T]) >= 0.95).float() lp_syn = LowPassSynapse(tau=10.0) pyplot.subplot(2, 1, 1) pyplot.bar(torch.arange(0, T).tolist(), in_spikes, label='in spike') pyplot.xlabel('t') pyplot.ylabel('spike') pyplot.legend() out_i = [] for i in range(T): out_i.append(lp_syn(in_spikes[i])) pyplot.subplot(2, 1, 2) pyplot.plot(out_i, label='out i') pyplot.xlabel('t') pyplot.ylabel('i') pyplot.legend() pyplot.show()

The output current is not only determined by the present input but also by the previous input, which makes this synapse have memory.

This synapse is sometimes used, e.g.:

Unsupervised learning of digit recognition using spike-timing-dependent plasticity

Another view is regarding this synapse as a LIF neuron with a \(+\infty\) threshold voltage.

The final output of this synapse (or the final voltage of this LIF neuron) represents the accumulation of input spikes, which substitute for traditional firing rate that indicates the excitatory level. So, it can be used in the last layer of the network, e.g.:

Enabling spike-based backpropagation for training deep neural network architectures

- class spikingjelly.activation_based.layer.DropConnectLinear(in_features: int, out_features: int, bias: bool = True, p: float = 0.5, samples_num: int = 1024, invariant: bool = False, activation: Module = ReLU(), state_mode='s')[源代码]

基类:

MemoryModule- 参数:

in_features (int) – 每个输入样本的特征数

out_features (int) – 每个输出样本的特征数

bias (bool) – 若为

False,则本层不会有可学习的偏置项。 默认为Truep (float) – 每个连接被断开的概率。默认为0.5

samples_num (int) – 在推理时,从高斯分布中采样的数据数量。默认为1024

invariant (bool) – 若为

True,线性层会在第一次执行前向传播时被按概率断开,断开后的线性层会保持不变,直到reset()函数 被调用,线性层恢复为完全连接的状态。完全连接的线性层,调用reset()函数后的第一次前向传播时被重新按概率断开。 若为False,在每一次前向传播时线性层都会被重新完全连接再按概率断开。 阅读 layer.Dropout 以 获得更多关于此参数的信息。 默认为Falseactivation (None or nn.Module) – 在线性层后的激活层

step_mode (str) – 步进模式,可以为 ‘s’ (单步) 或 ‘m’ (多步)

DropConnect,由 Regularization of Neural Networks using DropConnect 一文提出。DropConnect与Dropout非常类似,区别在于DropConnect是以概率

p断开连接,而Dropout是将输入以概率置0。备注

在使用DropConnect进行推理时,输出的tensor中的每个元素,都是先从高斯分布中采样,通过激活层激活,再在采样数量上进行平均得到的。 详细的流程可以在 Regularization of Neural Networks using DropConnect 一文中的 Algorithm 2 找到。激活层

activation在中间的步骤起作用,因此我们将其作为模块的成员。- 参数:

in_features (int) – size of each input sample

out_features (int) – size of each output sample

bias (bool) – If set to

False, the layer will not learn an additive bias. Default:Truep (float) – probability of an connection to be zeroed. Default: 0.5

samples_num (int) – number of samples drawn from the Gaussian during inference. Default: 1024

invariant (bool) – If set to

True, the connections will be dropped at the first time of forward and the dropped connections will remain unchanged untilreset()is called and the connections recovery to fully-connected status. Then the connections will be re-dropped at the first time of forward afterreset(). If set toFalse, the connections will be re-dropped at every forward. See layer.Dropout for more information to understand this parameter. Default:Falseactivation (None or nn.Module) – the activation layer after the linear layer

step_mode (str) – the step mode, which can be s (single-step) or m (multi-step)

DropConnect, which is proposed by Regularization of Neural Networks using DropConnect, is similar with Dropout but drop connections of a linear layer rather than the elements of the input tensor with probability

p.Note

When inference with DropConnect, every elements of the output tensor are sampled from a Gaussian distribution, activated by the activation layer and averaged over the sample number

samples_num. See Algorithm 2 in Regularization of Neural Networks using DropConnect for more details. Note that activation is an intermediate process. This is the reason why we includeactivationas a member variable of this module.- reset_parameters() None[源代码]

-

- 返回:

None

- 返回类型:

None

初始化模型中的可学习参数。

- 返回:

None

- 返回类型:

None

Initialize the learnable parameters of this module.

- class spikingjelly.activation_based.layer.PrintShapeModule(ext_str='PrintShapeModule')[源代码]

基类:

Module- 参数:

ext_str (str) – 额外打印的字符串

只打印

ext_str和输入的shape,不进行任何操作的网络层,可以用于debug。- 参数:

ext_str (str) – extra strings for printing

This layer will not do any operation but print

ext_strand the shape of input, which can be used for debugging.

- class spikingjelly.activation_based.layer.ElementWiseRecurrentContainer(sub_module: Module, element_wise_function: Callable, step_mode='s')[源代码]

基类:

MemoryModule- 参数:

sub_module (torch.nn.Module) – 被包含的模块

element_wise_function (Callable) – 用户自定义的逐元素函数,应该形如

z=f(x, y)step_mode (str) – 步进模式,可以为 ‘s’ (单步) 或 ‘m’ (多步)

使用逐元素运算的自连接包装器。记

sub_module的输入输出为 \(i[t]\) 和 \(y[t]\) (注意 \(y[t]\) 也是整个模块的输出), 整个模块的输入为 \(x[t]\),则\[i[t] = f(x[t], y[t-1])\]其中 \(f\) 是用户自定义的逐元素函数。我们默认 \(y[-1] = 0\)。

备注

sub_module输入和输出的尺寸需要相同。示例代码:

T = 8 net = ElementWiseRecurrentContainer(neuron.IFNode(v_reset=None), element_wise_function=lambda x, y: x + y) print(net) x = torch.zeros([T]) x[0] = 1.5 for t in range(T): print(t, f'x[t]={x[t]}, s[t]={net(x[t])}') functional.reset_net(net)

- 参数:

sub_module (torch.nn.Module) – the contained module

element_wise_function (Callable) – the user-defined element-wise function, which should have the format

z=f(x, y)step_mode (str) – the step mode, which can be s (single-step) or m (multi-step)

A container that use a element-wise recurrent connection. Denote the inputs and outputs of

sub_moduleas \(i[t]\) and \(y[t]\) (Note that \(y[t]\) is also the outputs of this module), and the inputs of this module as \(x[t]\), then\[i[t] = f(x[t], y[t-1])\]where \(f\) is the user-defined element-wise function. We set \(y[-1] = 0\).

Note

The shape of inputs and outputs of

sub_modulemust be the same.Codes example:

T = 8 net = ElementWiseRecurrentContainer(neuron.IFNode(v_reset=None), element_wise_function=lambda x, y: x + y) print(net) x = torch.zeros([T]) x[0] = 1.5 for t in range(T): print(t, f'x[t]={x[t]}, s[t]={net(x[t])}') functional.reset_net(net)

- class spikingjelly.activation_based.layer.LinearRecurrentContainer(sub_module: Module, in_features: int, out_features: int, bias: bool = True, step_mode='s')[源代码]

基类:

MemoryModule- 参数:

sub_module (torch.nn.Module) – 被包含的模块

in_features (int) – 输入的特征数量

out_features (int) – 输出的特征数量

bias (bool) – 若为

False,则线性自连接不会带有可学习的偏执项step_mode (str) – 步进模式,可以为 ‘s’ (单步) 或 ‘m’ (多步)

使用线性层的自连接包装器。记

sub_module的输入和输出为 \(i[t]\) 和 \(y[t]\) (注意 \(y[t]\) 也是整个模块的输出), 整个模块的输入记作 \(x[t]\) ,则\[\begin{split}i[t] = \begin{pmatrix} x[t] \\ y[t-1]\end{pmatrix} W^{T} + b\end{split}\]其中 \(W, b\) 是线性层的权重和偏置项。默认 \(y[-1] = 0\)。

\(x[t]\) 应该

shape = [N, *, in_features],\(y[t]\) 则应该shape = [N, *, out_features]。备注

自连接是由

torch.nn.Linear(in_features + out_features, in_features, bias)实现的。in_features = 4 out_features = 2 T = 8 N = 2 net = LinearRecurrentContainer( nn.Sequential( nn.Linear(in_features, out_features), neuron.LIFNode(), ), in_features, out_features) print(net) x = torch.rand([T, N, in_features]) for t in range(T): print(t, net(x[t])) functional.reset_net(net)

- 参数:

sub_module (torch.nn.Module) – the contained module

in_features (int) – size of each input sample

out_features (int) – size of each output sample

bias (bool) – If set to

False, the linear recurrent layer will not learn an additive biasstep_mode (str) – the step mode, which can be s (single-step) or m (multi-step)

A container that use a linear recurrent connection. Denote the inputs and outputs of

sub_moduleas \(i[t]\) and \(y[t]\) (Note that \(y[t]\) is also the outputs of this module), and the inputs of this module as \(x[t]\), then\[\begin{split}i[t] = \begin{pmatrix} x[t] \\ y[t-1]\end{pmatrix} W^{T} + b\end{split}\]where \(W, b\) are the weight and bias of the linear connection. We set \(y[-1] = 0\).

\(x[t]\) should have the shape

[N, *, in_features], and \(y[t]\) has the shape[N, *, out_features].Note

The recurrent connection is implement by

torch.nn.Linear(in_features + out_features, in_features, bias).in_features = 4 out_features = 2 T = 8 N = 2 net = LinearRecurrentContainer( nn.Sequential( nn.Linear(in_features, out_features), neuron.LIFNode(), ), in_features, out_features) print(net) x = torch.rand([T, N, in_features]) for t in range(T): print(t, net(x[t])) functional.reset_net(net)

- class spikingjelly.activation_based.layer.ThresholdDependentBatchNorm1d(alpha: float, v_th: float, *args, **kwargs)[源代码]

基类:

_ThresholdDependentBatchNormBase*args, **kwargs中的参数与torch.nn.BatchNorm1d的参数相同。Going Deeper With Directly-Trained Larger Spiking Neural Networks 一文提出 的Threshold-Dependent Batch Normalization (tdBN)。

- 参数:

Other parameters in

*args, **kwargsare same with those oftorch.nn.BatchNorm1d.The Threshold-Dependent Batch Normalization (tdBN) proposed in Going Deeper With Directly-Trained Larger Spiking Neural Networks.

- class spikingjelly.activation_based.layer.ThresholdDependentBatchNorm2d(alpha: float, v_th: float, *args, **kwargs)[源代码]

基类:

_ThresholdDependentBatchNormBase*args, **kwargs中的参数与torch.nn.BatchNorm2d的参数相同。Going Deeper With Directly-Trained Larger Spiking Neural Networks 一文提出 的Threshold-Dependent Batch Normalization (tdBN)。

- 参数:

Other parameters in

*args, **kwargsare same with those oftorch.nn.BatchNorm2d.The Threshold-Dependent Batch Normalization (tdBN) proposed in Going Deeper With Directly-Trained Larger Spiking Neural Networks.

- class spikingjelly.activation_based.layer.ThresholdDependentBatchNorm3d(alpha: float, v_th: float, *args, **kwargs)[源代码]

基类:

_ThresholdDependentBatchNormBase*args, **kwargs中的参数与torch.nn.BatchNorm3d的参数相同。Going Deeper With Directly-Trained Larger Spiking Neural Networks 一文提出 的Threshold-Dependent Batch Normalization (tdBN)。

- 参数:

Other parameters in

*args, **kwargsare same with those oftorch.nn.BatchNorm3d.The Threshold-Dependent Batch Normalization (tdBN) proposed in Going Deeper With Directly-Trained Larger Spiking Neural Networks.

- class spikingjelly.activation_based.layer.TemporalWiseAttention(T: int, reduction: int = 16, dimension: int = 4)[源代码]

-

- 参数:

T – 输入数据的时间步长

reduction – 压缩比

dimension – 输入数据的维度。当输入数据为[T, N, C, H, W]时, dimension = 4;输入数据维度为[T, N, L]时,dimension = 2。

Temporal-Wise Attention Spiking Neural Networks for Event Streams Classification 中提出 的MultiStepTemporalWiseAttention层。MultiStepTemporalWiseAttention层必须放在二维卷积层之后脉冲神经元之前,例如:

Conv2d -> MultiStepTemporalWiseAttention -> LIF输入的尺寸是

[T, N, C, H, W]或者[T, N, L],经过MultiStepTemporalWiseAttention层,输出为[T, N, C, H, W]或者[T, N, L]。reduction是压缩比,相当于论文中的 \(r\)。- 参数:

T – timewindows of input

reduction – reduction ratio

dimension – Dimensions of input. If the input dimension is [T, N, C, H, W], dimension = 4; when the input dimension is [T, N, L], dimension = 2.

The MultiStepTemporalWiseAttention layer is proposed in Temporal-Wise Attention Spiking Neural Networks for Event Streams Classification.

It should be placed after the convolution layer and before the spiking neurons, e.g.,

Conv2d -> MultiStepTemporalWiseAttention -> LIFThe dimension of the input is

[T, N, C, H, W]or[T, N, L], after the MultiStepTemporalWiseAttention layer, the output dimension is[T, N, C, H, W]or[T, N, L].reductionis the reduction ratio,which is \(r\) in the paper.

- class spikingjelly.activation_based.layer.MultiDimensionalAttention(T: int, C: int, reduction_t: int = 16, reduction_c: int = 16, kernel_size=3)[源代码]

-

- 参数:

T – 输入数据的时间步长

C – 输入数据的通道数

reduction_t – 时间压缩比

reduction_c – 通道压缩比

kernel_size – 空间注意力机制的卷积核大小

Attention Spiking Neural Networks 中提出 的MA-SNN模型以及MultiStepMultiDimensionalAttention层。

您可以从以下链接中找到MA-SNN的示例项目: - https://github.com/MA-SNN/MA-SNN - https://github.com/ridgerchu/SNN_Attention_VGG

输入的尺寸是

[T, N, C, H, W],经过MultiStepMultiDimensionalAttention层,输出为[T, N, C, H, W]。- 参数:

T – timewindows of input

C – channel number of input

reduction_t – temporal reduction ratio

reduction_c – channel reduction ratio

kernel_size – convolution kernel size of SpatialAttention

The MA-SNN model and MultiStepMultiDimensionalAttention layer are proposed in ``Attention Spiking Neural Networks <https://ieeexplore.ieee.org/document/10032591>`_.

You can find the example projects of MA-SNN in the following links: - https://github.com/MA-SNN/MA-SNN - https://github.com/ridgerchu/SNN_Attention_VGG

The dimension of the input is

[T, N, C, H, W], after the MultiStepMultiDimensionalAttention layer, the output dimension is[T, N, C, H, W].

- class spikingjelly.activation_based.layer.VotingLayer(voting_size: int = 10, step_mode='s')[源代码]

基类:

Module,StepModule投票层,对

shape = [..., C * voting_size]的输入在最后一维上做kernel_size = voting_size, stride = voting_size的平均池化- 参数:

Applies average pooling with

kernel_size = voting_size, stride = voting_sizeon the last dimension of the input withshape = [..., C * voting_size]

- class spikingjelly.activation_based.layer.Delay(delay_steps: int, step_mode='s')[源代码]

基类:

MemoryModule延迟层,可以用来延迟输入,使得

y[t] = x[t - delay_steps]。缺失的数据用0填充。代码示例:

delay_layer = Delay(delay=1, step_mode='m') x = torch.rand([5, 2]) x[3:].zero_() x.requires_grad = True y = delay_layer(x) print('x=') print(x) print('y=') print(y) y.sum().backward() print('x.grad=') print(x.grad)

输出为:

x= tensor([[0.2510, 0.7246], [0.5303, 0.3160], [0.2531, 0.5961], [0.0000, 0.0000], [0.0000, 0.0000]], requires_grad=True) y= tensor([[0.0000, 0.0000], [0.2510, 0.7246], [0.5303, 0.3160], [0.2531, 0.5961], [0.0000, 0.0000]], grad_fn=<CatBackward0>) x.grad= tensor([[1., 1.], [1., 1.], [1., 1.], [1., 1.], [0., 0.]])

- 参数:

A delay layer that can delay inputs and makes

y[t] = x[t - delay_steps]. The nonexistent data will be regarded as 0.Codes example:

delay_layer = Delay(delay=1, step_mode='m') x = torch.rand([5, 2]) x[3:].zero_() x.requires_grad = True y = delay_layer(x) print('x=') print(x) print('y=') print(y) y.sum().backward() print('x.grad=') print(x.grad)

The outputs are:

x= tensor([[0.2510, 0.7246], [0.5303, 0.3160], [0.2531, 0.5961], [0.0000, 0.0000], [0.0000, 0.0000]], requires_grad=True) y= tensor([[0.0000, 0.0000], [0.2510, 0.7246], [0.5303, 0.3160], [0.2531, 0.5961], [0.0000, 0.0000]], grad_fn=<CatBackward0>) x.grad= tensor([[1., 1.], [1., 1.], [1., 1.], [1., 1.], [0., 0.]])

- property delay_steps

- class spikingjelly.activation_based.layer.TemporalEffectiveBatchNormNd(T: int, num_features, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True, step_mode='s')[源代码]

基类:

MemoryModule- bn_instance

_BatchNorm的别名

- class spikingjelly.activation_based.layer.TemporalEffectiveBatchNorm1d(T: int, num_features, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True, step_mode='s')[源代码]

基类:

TemporalEffectiveBatchNormNd- 参数:

T (int) – 总时间步数

其他参数的API参见

BatchNorm1dTemporal Effective Batch Normalization in Spiking Neural Networks 一文提出的Temporal Effective Batch Normalization (TEBN)。

TEBN给每个时刻的输出增加一个缩放。若普通的BN在

t时刻的输出是y[t],则TEBN的输出为k[t] * y[t],其中k[t]是可 学习的参数。- 参数:

T (int) – the number of time-steps

Refer to

BatchNorm1dfor other parameters’ APITemporal Effective Batch Normalization (TEBN) proposed by Temporal Effective Batch Normalization in Spiking Neural Networks.

TEBN adds a scale on outputs of each time-step from the native BN. Denote the output at time-step

tof the native BN asy[t], then the output of TEBN isk[t] * y[t], wherek[t]is the learnable scale.- bn_instance

BatchNorm1d的别名

- class spikingjelly.activation_based.layer.TemporalEffectiveBatchNorm2d(T: int, num_features, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True, step_mode='s')[源代码]

基类:

TemporalEffectiveBatchNormNd- 参数:

T (int) – 总时间步数

其他参数的API参见

BatchNorm2dTemporal Effective Batch Normalization in Spiking Neural Networks 一文提出的Temporal Effective Batch Normalization (TEBN)。

TEBN给每个时刻的输出增加一个缩放。若普通的BN在

t时刻的输出是y[t],则TEBN的输出为k[t] * y[t],其中k[t]是可 学习的参数。- 参数:

T (int) – the number of time-steps

Refer to

BatchNorm2dfor other parameters’ APITemporal Effective Batch Normalization (TEBN) proposed by Temporal Effective Batch Normalization in Spiking Neural Networks.

TEBN adds a scale on outputs of each time-step from the native BN. Denote the output at time-step

tof the native BN asy[t], then the output of TEBN isk[t] * y[t], wherek[t]is the learnable scale.- bn_instance

BatchNorm2d的别名

- class spikingjelly.activation_based.layer.TemporalEffectiveBatchNorm3d(T: int, num_features, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True, step_mode='s')[源代码]

基类:

TemporalEffectiveBatchNormNd- 参数:

T (int) – 总时间步数

其他参数的API参见

BatchNorm3dTemporal Effective Batch Normalization in Spiking Neural Networks 一文提出的Temporal Effective Batch Normalization (TEBN)。

TEBN给每个时刻的输出增加一个缩放。若普通的BN在

t时刻的输出是y[t],则TEBN的输出为k[t] * y[t],其中k[t]是可 学习的参数。- 参数:

T (int) – the number of time-steps

Refer to

BatchNorm3dfor other parameters’ APITemporal Effective Batch Normalization (TEBN) proposed by Temporal Effective Batch Normalization in Spiking Neural Networks.

TEBN adds a scale on outputs of each time-step from the native BN. Denote the output at time-step

tof the native BN asy[t], then the output of TEBN isk[t] * y[t], wherek[t]is the learnable scale.- bn_instance

BatchNorm3d的别名

- class spikingjelly.activation_based.layer.GradwithTrace(module)[源代码]

基类:

Module- 参数:

module – 需要包装的模块

用于随时间在线训练时,根据神经元的迹计算梯度 出处:’Online Training Through Time for Spiking Neural Networks <https://openreview.net/forum?id=Siv3nHYHheI>’

- 参数:

module – the module that requires wrapping

Used for online training through time, calculate gradients by the traces of neurons Reference: ‘Online Training Through Time for Spiking Neural Networks <https://openreview.net/forum?id=Siv3nHYHheI>’

- class spikingjelly.activation_based.layer.SpikeTraceOp(module)[源代码]

基类:

Module- 参数:

module – 需要包装的模块

对脉冲和迹进行相同的运算,如Dropout,AvgPool等

- 参数:

module – the module that requires wrapping

perform the same operations for spike and trace, such as Dropout, Avgpool, etc.

- class spikingjelly.activation_based.layer.OTTTSequential(*args)[源代码]

基类:

Sequential

- class spikingjelly.activation_based.layer.WSConv2d(in_channels: int, out_channels: int, kernel_size: Union[int, Tuple[int, int]], stride: Union[int, Tuple[int, int]] = 1, padding: Union[str, int, Tuple[int, int]] = 0, dilation: Union[int, Tuple[int, int]] = 1, groups: int = 1, bias: bool = True, padding_mode: str = 'zeros', step_mode: str = 's', gain: bool = True, eps: float = 0.0001)[源代码]

基类:

Conv2d其他的参数API参见

Conv2d- 参数:

gain – whether introduce learnable scale factors for weights

eps (float) – a small number to prevent numerical problems

Refer to

Conv2dfor other parameters’ API

- class spikingjelly.activation_based.layer.WSLinear(in_features: int, out_features: int, bias: bool = True, step_mode='s', gain=True, eps=0.0001)[源代码]

基类:

Linear其他的参数API参见

Linear- 参数:

gain – whether introduce learnable scale factors for weights

eps (float) – a small number to prevent numerical problems

Refer to

Linearfor other parameters’ API